10.15178/va.2019.146.97-111

RESEARCH

TRUTHS AND LIES ON THE INTERNET: THE NECESSARY AWAKENING OF A CRITICAL AWARENESS BETWEEN TRANSLATION STUDENTS

Verdades y mentiras en Internet: el despertar necesario de una conciencia crítica entre estudiantes de traducción

VERDADE E MENTIRAS NA INTERNET: O DESPERTAR NECESSÁRIO DE UMA CONCIÊNCIA CRÍTICA ENTRE ESTUDANTES DE TRADUÇÃO

Alfredo Álvarez-Álvarez¹. PhD in French Philology from the Autonomous University of Madrid and professor at the University of Alcalá, specializing in the use of ICT and, in particular, social networks in the classroom.

¹ University of Alcalá, Madrid, Spain.

ABSTRACT

This article aims, first of all, to encourage a critical approach to the Internet information handled by each user and, in particular, to awaken in university translation students the need to evaluate the documentation they access and will work with in their future careers. The paper describes a project carried out in a general French-Spanish translation course at the University of Alcalá, in which a series of website analysis grids were designed to help students determine how far any website can be trusted.

KEY WORDS: reliability, post-truth, fact checking, analysis grid, Internet, translation, alternative facts

RESUMEN

El presente artículo pretende, en primer lugar, plantear una visión crítica de la información que cada usuario maneja en Internet y, en particular, despertar en los estudiantes universitarios de traducción la necesidad de evaluar la documentación a la que acceden y con la que trabajarán en su futuro profesional. Se desarrolla en este trabajo el proyecto realizado en una clase de traducción general Francés-Español en la Universidad de Alcalá, en el cual se han diseñado una serie de parrillas de análisis de espacios web que ayuden a los estudiantes a determinar el grado de fiabilidad que pueden otorgar a un sitio web.

PALABRAS CLAVE: fiabilidad, posverdad, verificación de hechos, parrilla de análisis, Internet, traducción, hechos alternativos

RESUMO

O presente artigo pretende, em primeiro lugar plantear uma visão crítica da informação que cada usuário maneja na internet e, em particular, despertar nos estudantes universitários de tradução a necessidade de valorar a documentação a qual acedem e com a que trabalharam em seu futuro profissional. Se desenvolve neste trabalho o projeto realizado em uma classe de tradução geral Frances-Espanhol na Universidade de Alcalá, na qual foi desenhada uma serie de tabelas de analises de espaços web que ajudem a os estudantes a determinar os graus de fiabilidade que possam outorgar a página web.

PALAVRAS CHAVE: fiabilidade, post verdade, verificação de fatos, tabelas de analises, internet,tradução, fatos alternativos

Correspondence: Alfredo Álvarez Álvarez: Universidad de Alcalá. España.

a.alvarezalvarez@uah.es

Received: 09/03/2018

Accepted: 28/06/2018

Project carried out in the General Translation and Interculturality class (FR-ES), in the Degree in Modern Languages and Translation, on the Guadalajara campus, with the collaboration of the following students: Bárbara Abajas Pérez, Azyadé Allal, Paula Asensi Gómez, Celia Biedma Méndez, Ramata Camara, Sergio Costero Cerrada, Coralie Crétenet, Sofia Da Silva Cunha, Elena De Lio, Ana Francisco Saavedra, Miguel Ángel Gacituaga Esquinas, Victoria Soledad García Hernández, Yorquidia Guzmán Guzmán, Anne-Lise Lerbourg, Raúl Martín Gil, David Felipe Mora Londoño, Pascaline Mpah-Njanga, Amelia Ixchel Ruiz de Gauna Rivera, Julia Salgado Gómez, Fatoumata Sané.

How to cite the article:

Álvarez Álvarez, A. (2019). Truths and lies on the Internet: the necessary awakening of a critical awareness between translation students. [Verdades y mentiras en Internet: el despertar necesario de una conciencia crítica entre estudiantes de traducción]. Vivat Academia. Revista de Comunicación, 146, 97-111. http://doi.org/10.15178/va.2019.146.97-111 Retrieved from http://www.vivatacademia.net/index.php/vivat/article/view/1119

1. INTRODUCTION

In teaching, as in other fields, we work with young people who are used to moving through the different environments of the Web practically without obstacles. Since they will join the working world more or less immediately, they will need to apply valid and, above all, non-deceptive criteria when working with the Internet, so that the materials they habitually handle are only those whose content can truly be considered reliable. Stated this way, the goal seems simple, but in reality it is not easy to achieve. Let us not forget that the size of the Web has long since ceased to be comprehensible to the ordinary user.

Especially in the field of translation, it is important to convey to students that their documentation sources must be reliable from start to finish. That is, the quality of the texts they will produce in their professional careers depends to a large extent on rigorously applying working standards in which accessing only reliable sources is a top priority.

However, this task is not simple since, as often happens with technologies, it requires students to set aside habits acquired while learning to use the Internet in a fairly disorganized way, and to accept that critical browsing is necessary every time they go online.

To strengthen their skills in this respect, a project was set up to create several tools for determining the reliability of a website. It was carried out in the course General Translation and Interculturality (French-Spanish), in the 3rd year of the Degree in Modern Languages and Translation at the University of Alcalá (2016-2017 academic year).

1.1. Truths and lies on the Web

The foregoing reinforces the fact that the Internet must be approached with caution, since it is far from an innocent environment. It concentrates all kinds of interests (confessable, less confessable and totally obscure), which often find in advertising a sufficient pretext to fill our inboxes, or our profiles on different social networks, with offers that are supposed to awaken our interest based on the information we leave behind in many of the spaces we visit.

In any case, advertising is not the greatest evil we may encounter if we do not filter the information we seek or receive.

We must be aware that browsing means, first of all, making decisions (each mouse click represents one of them) that should be resolved as a trade-off between time and reliability. Although information and knowledge have never before been so accessible to everyone, it can be said that there has been no other period in which the possibilities of manipulation have been so powerful and evident. After all, the Internet is nothing more than a reflection of life, showing every facet of humankind. Moreover, easy access to information and knowledge leads many unsuspecting people to think that accessing knowledge de facto means possessing it. This is far from the truth: thinking so is like convincing oneself that watching a pilot fly an airplane automatically turns us into aviation specialists. This is what the French philosopher Michel Serres (2012) calls the presumption of competence. It is necessary to be clearly aware that, for anyone who intends to transmit a deceptive, fallacious or biased message, the Internet is the ideal environment, because it can reach a huge number of people in a minimum amount of time. In fact, manipulation often comes wrapped in seemingly new concepts that, for the uninitiated, have a patina of modernity (or postmodernity) that makes them easier to consume.

The intentionality of the message can be seen more clearly in areas such as political communication, where some of these “new-old” ideas have recently begun to circulate widely. Let us look at two significant examples. The first is so-called post-truth. This concept has been defined as “a cultural and historical context in which empirical testing and the search for objectivity are less relevant than belief itself and the emotions it generates when creating currents of public opinion” (Torres, ND). It is actually a euphemism for another form of lying, one that attempts to blur the boundaries between truth and falsehood; in the words of Mejía (2016), it “defines a certain attitude of contempt towards the idea of truth, which is seen either as an illusion or at least as something that has no use”. For Flichtentrei (2017), post-truth is “false, absurd, unscientific; but frequent and powerful for fleeing from the TRUTH in capital letters”. From his point of view, he examines how we react to evidence that contradicts our beliefs and identifies seven possible responses:

– Ignore the data

– Deny the data

– Exclude the data

– Suspend judgment

– Reinterpret the data

– Accept the data and make peripheral changes to the theory

– Accept the data and change the theory

In any case, as Torres suggests, the neologism post-truth basically “serves to indicate a tendency in the creation of arguments and discourses [...] in which objectivity matters much less than the way in which what is stated fits with the belief system that we feel and that makes us feel good”.

Another neologism that took shape not long ago and that (again) seems to show considerable vigor comes from English: alternative facts, a term that CNN (2017) attributes to Kellyanne Conway, Donald Trump’s adviser, in connection with the estimated attendance at the US president’s inauguration and its comparison with previous inaugurations, which, according to the American press, had drawn larger crowds. Be that as it may, so-called alternative facts are, simply, lies. However, they have the not insignificant characteristic of usually being backed by a powerful media apparatus that will go to any lengths so that citizens, especially the gullible or unconditional supporters, have an ad hoc explanation that allows them to accept, emotionally and irrationally, what they ultimately want to believe.

In fact, the term post-truth has been known in psychology for years (Torres, ND) and is related to “the intellectual sacrifices we accept in order to maintain a standing belief system that has taken root in our identity”. For example, when a president like Donald Trump decides to communicate with his voters through his Twitter account instead of through the usual channel of the media, he is short-circuiting the possibility of verifying what he says or proposes. None of this is innocent; it is understood that the objective is to create a context in which truth, fact-checking and the presentation of evidence cease to be valued, which produces what has come to be called the perfect storm.

1.2. And what about digital natives?

Technologies burst into our world several decades ago, which means that there has been time for several generations of so-called digital natives to be born and grow up surrounded by them on all sides. Does this necessarily lead us to conclude that young people are better equipped than previous generations to venture into the stormy worlds of the Internet? Not necessarily: while it is fair to recognize their mastery of the technological tool, it is equally fair to note that their use of it is elementary and rather unreflective, which can give rise to many problems derived from the (mis)use of the Internet.

Therefore, when dealing with future professionals (in my case, translators), teachers must be aware that one of our most important missions is to make them understand that a controlled and reasonable use of the Internet and its resources will give excellent results, while a disorderly use may cause many problems. How, then, should this awareness-raising process be undertaken? The author of these lines considers that developing a critical awareness among these young people is absolutely essential, obviously not only for them to become responsible future professionals, but also for their own lives.

In the case at hand, the aim is for students to have tools that they can use simply and that lead them to take a fresh look at the contents of the Web. We agree with Fornas (2003) that any Internet user needs a set of guidelines for evaluating information in order to determine its quality. In fact, he proposes a battery of minimum evaluation criteria: authority, credentials, intelligibility of the message, independence, usability, impartiality, timeliness, utility and the original sources of the document.

In general terms, this set of tools seems acceptable to us, although it is intended for professional information coming from qualified outlets. Translation, however, has some particular features worth noting. First, this activity requires very diverse documentation sources, which makes it more complicated to design any kind of tool. Second, specialized translation covers a virtually unlimited range of subjects whose characteristics may overlap partially, almost entirely or hardly at all, which calls either for a very versatile tool (something very difficult to design) or for several tools capable of covering the different types of sites found on the Web.

1.3. The prolegomena of the project

To introduce students to the project from a practical perspective, we began by presenting a grid for the analysis of lexicographic resources (Álvarez, 2007), with which 21 dictionaries and glossaries were evaluated. This step was considered very relevant, since the process of evaluating a dictionary (in our case, based on 25 items) placed students in a context of necessary reflection on the elements presented by each lexicographic resource, prompting them to consider whether or not each of those elements was relevant.

Next, the Decodex (1) tool, designed by the newspaper Le Monde and launched in February 2017, was incorporated for study and analysis. It should be noted that its creation and operation are governed by a charter (2), or statement of principles, formulated in 10 points, which sets out its basic operating principles. It also includes a guide intended for teachers, called Kit to detect false information (3), which provides clues for working with students.

From then on, the students were asked to search for applications or websites that offer data verification, following the Decodex model as closely as possible. A list was drawn up accordingly, from which we collect some examples:

– Majestic.com (4) crawls and maps the Internet and has created the world’s largest commercial link intelligence database. This map of the Internet is used by search engine optimization (SEO) services, new media specialists, affiliate managers and online marketing experts for various purposes related to online prominence, such as link building, reputation management, web traffic development, competitor analysis and news monitoring. According to its own information, Majestic constantly reviews websites and visits around one billion URLs per day.

– Contrefacon.fr (5) defines itself as “La première plateforme sociale de lutte contre les sites de contrefaçon” (“the first social platform for fighting counterfeit sites”). More specifically, the site states: “Automated, perfectly referenced and anonymous, counterfeit sales sites use web strategies so sophisticated that they quite often end up scamming the consumer”(6).

– Hoaxbuster (7): a French site to which web users send articles for its editorial team to verify (checking the source, contacting the people or institutions who wrote the articles). The verified articles form a database that distinguishes fake articles from genuine ones, and users can search the Hoaxbuster site for the content of any article to find out whether it is true or false.

– Inspecteur viral (8): a section of the newspaper Métro (Montreal) that checks the veracity of news items. It offers access to several articles debunking false information.

– Désintox (9): the fact-checking tool of the newspaper Libération.

– WOT, Web of Trust (10): an extension that can be installed in Chrome, Firefox or Internet Explorer; there is also a mobile app. It checks the reliability of the pages consulted (reliability of the information, the page’s author, etc.).

(1) See http://www.lemonde.fr/verification/

(2) See http://www.lemonde.fr/les-decodeurs/article/2014/03/10/la-charte-des-decodeurs_4365106_4355770.html

(3) See http://bit.ly/2F5uDRW

(4) See https://es.majestic.com/

(5) See https://www.contrefacon.fr/

(6) Source: Own translation.

(7) See http://www.hoaxbuster.com/

(8) See http://journalmetro.com/opinions/inspecteur-viral/

(9) See http://www.liberation.fr/desintox

(10) See https://www.mywot.com/

It is worth clarifying that, at present, data verification processes are still incomplete, so the measurement mechanisms must be considered to be at an initial stage. As a result, many users are not aware of the importance of the issue or of the scale the problem can reach. These data verification applications do not yet have enough coverage to analyze every type of page. There is also the language barrier, for which there is no solution at the moment, since each site usually analyzes pages written in its own language. It is therefore reasonable to expect that more and more resources of this type will appear in the near future, allowing users at least to form an approximate idea of the veracity of the information they use.

2. OBJECTIVES

In accordance with the above, the execution of the project was proposed in the class, with the following objectives:

– To prepare as consistent a classification as possible of the Internet sites that a professional translator can use as documentation sources.

– To design, if possible, a series of proposed tools for determining the level of reliability of those resources.

The process was meant to be as collaborative as possible, so that the final result would genuinely be the outcome of cooperation across the whole group. Although, as the students reported, none of them was familiar with the subject, they judged the proposal interesting and useful for their future profession. This led to fairly enthusiastic participation, and the deadlines were met comfortably.

3. METHODOLOGY

In its first phase, the research consisted of identifying categories of pages to which differentiated analysis models could be applied. To that end, each subgroup was asked to propose a classification of Internet sites; the resulting classification is reproduced below:

– Information pages. This category includes all websites whose primary purpose is to provide information, whether free, paid, restricted, etc., delivered by any means (text, audio or audiovisual) and updated at any frequency (daily, weekly, fortnightly, monthly, etc.).

– Institutional pages. This category covers websites supported by local, regional, national or supranational institutions, whatever their content.

– Academic pages (universities / secondary schools / MOOCs). This category includes websites supported by educational institutions, such as universities and secondary schools offering regulated education, as well as certain other forms of teaching, such as massive open online courses (MOOCs).

– Blogs / Wikis / Personal pages. This section comprises websites that, because of their content, tend to be maintained by private individuals.

– Social networks. In this case, basically three are included (Twitter, Facebook, Instagram), since their large numbers of users give them the widest acceptance.

– Browsers. This software, which we use so habitually, should make us think, because we generally assume it is “innocent”, in the sense that it lets us browse discreetly without leaving a trace. That is not true: some browsers, and not exactly the least used ones, store much of our data (search history, for example) without our being aware of it. If we want to use “private browsing” mode, which in theory would let us browse discreetly and without “witnesses” that keep our data, the procedure for doing so clashes with the demand for speed that governs Internet use, so it is easy to give up on it.

This group was left out of the analysis because, although browsers are at the heart of most Internet searches, they are not in themselves a repository of direct information; the information they provide comes from secondary or tertiary sources.

– Unclassifiable or difficult-to-classify pages. This group includes websites such as El Rincón del vago, whose contents are not verified and whose reliability is unknown. It also includes Wikipedia, a collaborative space that anyone can edit and publish to without proper fact-checking since, in theory, the work is done by volunteers whose knowledge of the different subjects is not accredited. Other types of pages, such as those selling any kind of product, are also included in this section.

Like the previous one, this group was excluded from the project, basically because of the extreme difficulty (or, in many cases, impossibility) not only of determining the veracity of its contents, but even of establishing its authorship.

Once the classification was complete, the groups of sites were distributed in order to move on to the next phase: preparing the analysis tools.

4. DISCUSSION / RESULTS

A total of five evaluation grids were prepared, one for each of the five types of web page to be evaluated. As expected given the process, all of them shared a series of common items, plus a set of specific items.

The basis of the proposal included the elements considered necessary for all the grids. To establish these concepts, criteria stemming from the traditional culture of the book were applied, because we see no reason that prevents their application and because these criteria have proven their effectiveness over the centuries. They are the following:

Site identification

It is very common to find a web page (personal, corporate, etc.) that lacks basic identification, that is, a person, an institution, etc. standing behind it. This does not help to establish a relationship of trust: if we do not know who is providing the information, it will hardly be entirely credible. Moreover, content provided by an individual, by a group of people (institution, organization, association, etc.) or by an entity of any other kind will not be judged in the same way. Likewise, the page should have a contact form that allows users to get in touch with someone to comment, ask questions, etc.

The identification of each site is necessary for obvious reasons.

Authority

By this we mean an individual, group, institution, etc. that can prove sufficient knowledge of the subject presented. In other words, content presented by an individual holding a relevant qualification will be rated more highly than content from an individual who offers no accreditation of any kind. The same applies to an institution, organization or association: specialized bodies will obviously have a level of credibility that others lack.

Accessibility

Here we refer to the type of access a page offers: free, paid, restricted, etc. In general, the free offer of lexicographic sources on the Internet is quite broad, but there are also other resources that require a subscription, purchase, etc.

Updating

One circumstance that, unfortunately, recurs with some frequency is that content or websites are published and, at a certain point, cease to interest their authors. In such cases, the proper course would be to unpublish the content or the website itself. However, this hardly ever happens; it is more usual for the information to enter a stage of obsolescence. Naturally, depending on the content, this may matter more or less. But the absence of updates, above all when the user is unaware of it, can easily mislead: it amounts to a lack of objectivity, and even to possible deception by omission, to the detriment of the user. Therefore, whether or not a web page is updated, together with the no less important question of whether users can reliably know that updates do occur and, if possible, how often, turns out to be of enormous importance.
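The Updating criterion can be made operational in more than one way. The Python sketch below shows one possibility, assuming that a page displays a last-update date and, optionally, a declared update frequency. The function name `update_status`, its category labels and the rule of flagging content once twice the declared interval has elapsed are hypothetical choices made for illustration; they are not part of the grids described in this article.

```python
from datetime import date, timedelta
from typing import Optional


def update_status(last_updated: Optional[date], today: date,
                  declared_interval: Optional[timedelta]) -> str:
    """Hypothetical classification of a page for the 'Updating' criterion.

    - No visible update date: the user cannot tell whether the content
      is maintained, so the status is "unknown".
    - Date visible but no declared frequency: "dated" (judge manually).
    - More than twice the declared interval elapsed: "possibly obsolete"
      (the factor of 2 is an arbitrary assumption).
    """
    if last_updated is None:
        return "unknown"
    if last_updated > today:
        raise ValueError("last_updated cannot be later than today")
    if declared_interval is None:
        return "dated"
    if today - last_updated > 2 * declared_interval:
        return "possibly obsolete"
    return "up to date"


# Example: a weekly glossary last updated eight days before the check.
print(update_status(date(2018, 3, 1), date(2018, 3, 9), timedelta(days=7)))
```

Returning "unknown" rather than a failing grade when no date is shown reflects the point made above: the problem is not only obsolescence itself but the user's inability to know whether it has occurred.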

Verified documentary references

It is undoubtedly useful to draw a basic distinction between general users and professional users. Rigor in content and information must always be required, but in professional environments such as translation, where specialized or highly specialized information is needed, the filters must be very precise; otherwise, depending on the subject, the risk of inaccuracies is not negligible. For this reason, it is very important to use verified material and, therefore, to resort to equally verified documentary sources, whether primary or secondary. This applies not only to thematic resources but also to lexicographic ones. The latter have undergone a profound transformation with respect to traditional paper dictionaries (Álvarez, 2010), especially with the introduction of hypertext, whose internal links turn dictionaries, glossaries, lexicons, etc. into a virtually unlimited store of information. For all these reasons, a web space, whether lexicographic or thematic, that offers verified documentary references undoubtedly provides an important and necessary level of reliability.

Usability

With this term we refer to what Fornas (2003) calls “the ease of use, whether it is a web page, an ATM or any system that interacts with a user”. Elements such as colors, the layout of contents, the type of menus, help options, the site map, etc. help users understand the site better and greatly facilitate navigation. In the case of thematic content, users will undoubtedly appreciate the presence of a search engine.

Level of language

For future translators, language and written expression are highly relevant, not only because their professional careers will demand a broad command of them, but because their working tool is, and will remain, the word. Moreover, content presented on a poorly written website, or one with orthotypographic errors, hardly makes the best letter of introduction for an optimal level of reliability. It is therefore necessary to demand that those who publish on the Internet meet at least minimally high standards of expression and wording, which is not always the case. Finally, however obvious it may be, we must discard any text that is not written with clear respect or that includes disqualifications, insults, etc.

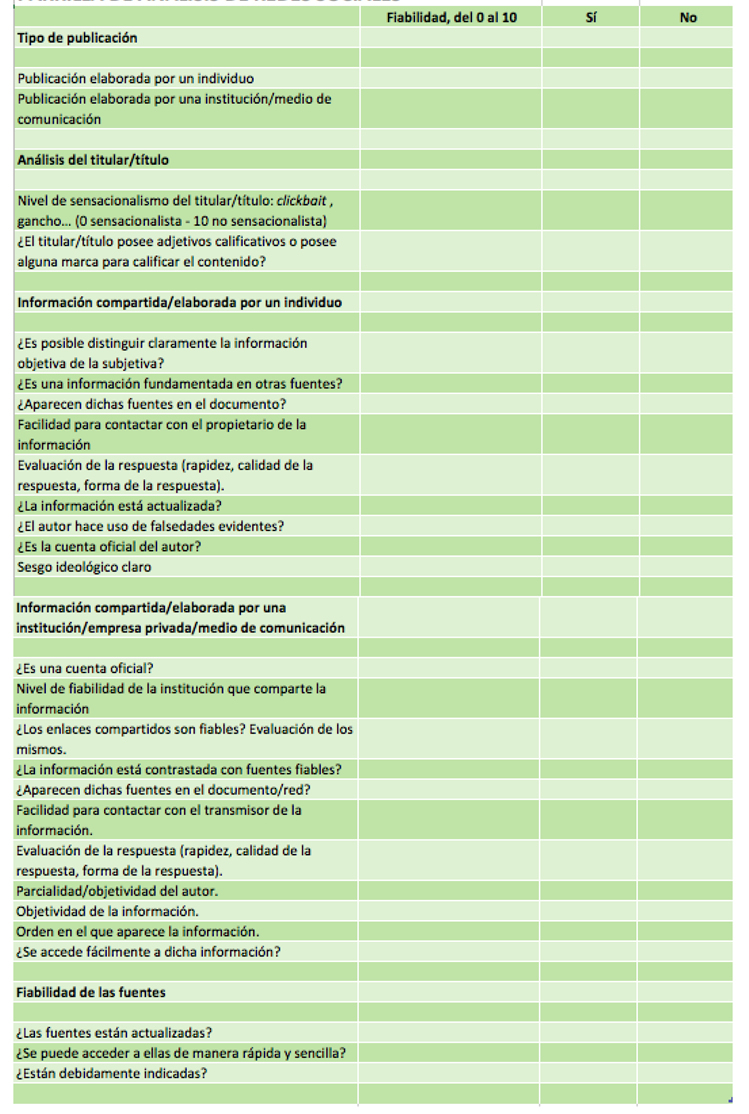

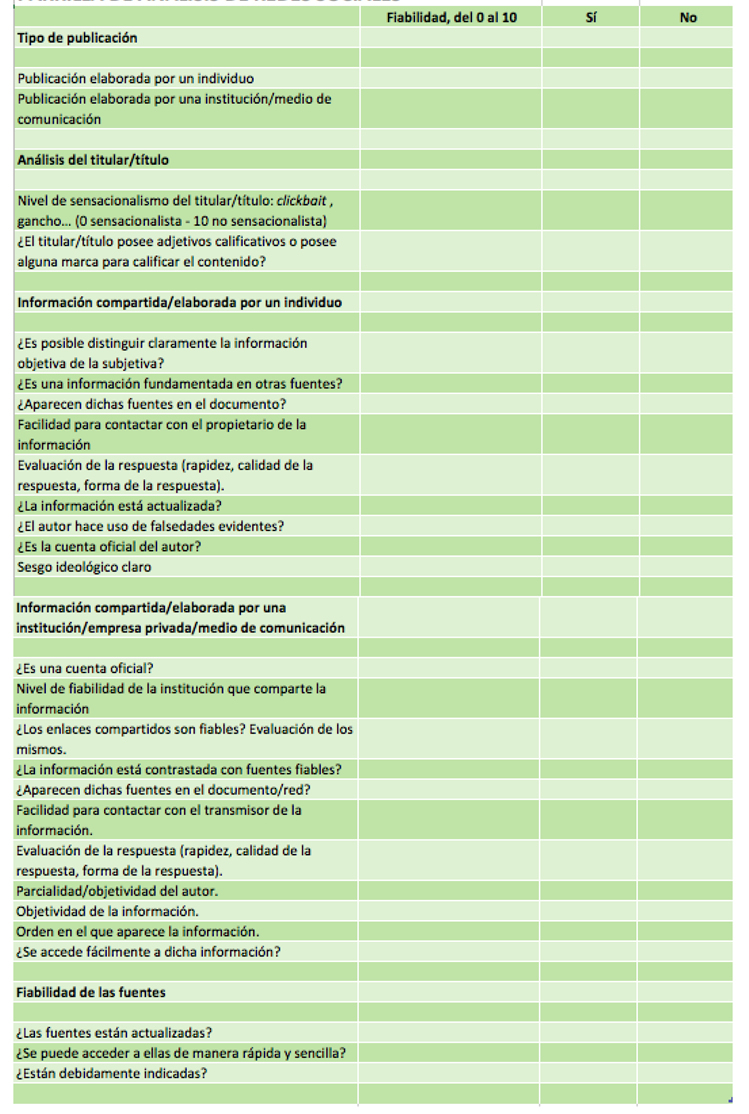

Building on these points, each grid also included the concepts considered specific to its category in the classification of Internet sites. To offer a more effective approach, each grid assigns a numerical rating to each of its items; since the grids were designed in Excel, the score is calculated automatically, so that in addition to a conceptual assessment a numerical rating out of a maximum of 100 points is available. The set of grids offers a total of 200 points of analysis, an average of 40 per grid across the five groups of Internet sites, which, we believe, gives the analysis a good level of reliability. The model developed for the analysis of social networks is provided in Annex I.
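As a rough illustration of how such a spreadsheet rating works, the Python sketch below sums the points awarded to each item and rescales the total to a maximum of 100. The `GridItem` structure, the `grid_total` function and the item weights in the example are hypothetical: the article does not specify how many points each item carries, and the example uses only the common items listed above, leaving out the category-specific ones.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GridItem:
    name: str
    max_points: float   # hypothetical weight of the item within its grid
    score: float = 0.0  # points awarded by the evaluator (0..max_points)


def grid_total(items: List[GridItem]) -> float:
    """Return the grid score rescaled to a maximum of 100 points."""
    possible = sum(item.max_points for item in items)
    if possible <= 0:
        raise ValueError("the grid has no scorable items")
    for item in items:
        if not 0 <= item.score <= item.max_points:
            raise ValueError(f"score out of range for {item.name!r}")
    awarded = sum(item.score for item in items)
    return round(100 * awarded / possible, 1)


# Hypothetical evaluation of one website using only the common items.
example = [
    GridItem("Site identification", 15, 15),
    GridItem("Authority", 20, 10),
    GridItem("Accessibility", 10, 10),
    GridItem("Updating", 15, 5),
    GridItem("Verified documentary references", 20, 20),
    GridItem("Usability", 10, 5),
    GridItem("Level of language", 10, 10),
]
print(grid_total(example))
```

Rescaling lets grids with different numbers of items be compared on the same 0-100 scale; in a spreadsheet, the same result comes from dividing the sum of the awarded-points column by the sum of the maximum-points column and multiplying by 100.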

ANNEX I

SOCIAL NETWORK ANALYSIS GRID

Source: Own work.

5. CONCLUSIONS

The use of the Internet, especially in the documentation process of future translators, requires a very careful approach to sources. These must be, first and foremost, reliable, for which it is essential to use tools to determine their level of reliability. At present, such tools are in most cases still under development and are offered for very specific topics, most notably news. In fact, the reliability of the tools themselves is often unknown a priori, mainly because their creators do not always specify the basis on which they rest.

In this project, a series of tools for assessing the reliability of different Internet environments were developed, with a view to fostering among students a critical awareness of the contents of the Web. The result has materialized in five analysis grids, one for each type of web space, containing the points considered most significant when assigning a level of confidence to information.

The project is considered complete in its first phase, which was to raise awareness of the need to analyze the content accessed on the Web. The next stage will be to test each of the grids in its environment on a significant number of pages, in order to see how effective they are and whether, and in what way, they need to be modified, and finally to create an application that allows them to be made available online.

The difficulties encountered in the process mainly stemmed from the students’ limited or non-existent knowledge of the topic, which made it harder to pin down the concepts each grid should address, since their use of the Web practically excludes any critical apparatus. Another difficulty was giving coherence to the set of grids, which was nonetheless necessary in order to apply the same principles to all of them. In both cases, these difficulties were overcome by using the earlier models referred to in previous sections.

REFERENCES

1. Álvarez A (2007). Estudio de los recursos Internet aplicados a la enseñanza y a la traducción del francés (doctoral thesis). Universidad Autónoma de Madrid. Retrieved from https://repositorio.uam.es/handle/10486/2353

2. Álvarez-Álvarez A (2009). Nuevas Tecnologías para la clase de Francés Lengua Extranjera. Madrid: Quiasmo Editorial.

3. Álvarez-Álvarez A (2010). Modelos de análisis para recursos lexicográficos en línea, in Sendebar, Revista de la Facultad de Traducción e Interpretación, 21, 181-200.

4. Álvarez-Álvarez A (2014). Actitudes y valoración en el uso de una red social para el aprendizaje del Francés Lengua Extranjera, in Revista de Comunicación Vivat Academia, 128, 92-106.

5. Álvarez-Álvarez A (2016). Detección de necesidades de aprendizaje mediante el uso de una red social y del vídeo en una clase de Francés Lengua Extranjera, in Revista de Comunicación de la SEECI, 39, 1-16. Retrieved from http://www.seeci.net/revista/index.php/seeci/article/view/326

6. CNN (2017). Asesora de Trump: Casa Blanca citó “hechos alternativos” sobre asistencia a toma de posesión, in CNN en Español. Retrieved from http://cnnespanol.cnn.com/2017/01/22/asesora-de-trump-casa-blanca-ofrecio-hechos-alternativos-sobre-asistencia-a-toma-de-posesion/#more-371940

7. Dunbar R (2010). How many friends does one person need? Dunbar’s number and other evolutionary quirks. Faber & Faber.

8. Flichtentrei D (2017). Posverdad: la ciencia y sus demonios, in IntraMed Journal, 6(1).

9. Fornas-Carrasco R (2003). Criterios para evaluar la calidad y fiabilidad de los contenidos en Internet, in Revista española de Documentación Científica, 26(1).

10. Mejía DA (2016). Editorial. La investigación y las decisiones sociales, in Voces y Silencios: Revista Latinoamericana de Educación, 7(2), 1-3.

11. Nepomiachi R (2000). El cartel y la función del extimo. Ornicar? Digital. Retrieved from http://wapol.org/ornicar/articles/153nep.htm

12. Serres M (2012). Petite Poucette. Paris: Éditions Le Pommier.

13. Tamir D, et al. (2012). Disclosing information about the self is intrinsically rewarding, in PNAS, 109(8038).

14. Torres A (ND). Posverdad (mentira emotiva): definición y ejemplos. Retrieved from https://psicologiaymente.net/social/posverdad

AUTHOR

Alfredo Álvarez Álvarez is a professor of French Language at the University of Alcalá (Madrid). He holds a PhD from the Autonomous University of Madrid, with a thesis on Internet resources for learning French and for French translation, and his research focuses on the integration of technologies in the classroom. He has published extensively on the subject, notably New Technologies for the French class: theory and practice. His publications include Attitudes and valuation in the use of a social network for the learning of French as a foreign language (2014, Vivat Academia, Vol. 128); the book chapter The use of social networks in the creation of a narrative: learning on the Internet, in Current communication book: social networks and 2.0 and 3.0 (2014, McGraw Hill); and Detection of learning needs through the use of a social network and video in a class of French Foreign Language (2016, Journal of Communication of the SEECI, vol. 39).

a.alvarezalvarez@uah.es